Private Multiparty Perception for Navigation

Abstract

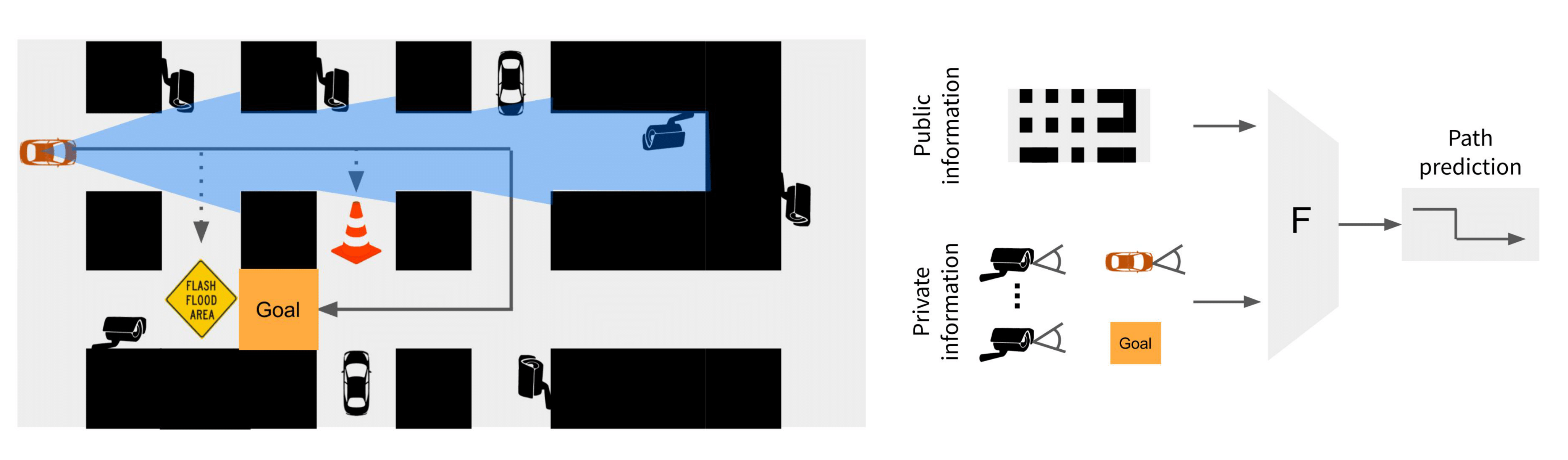

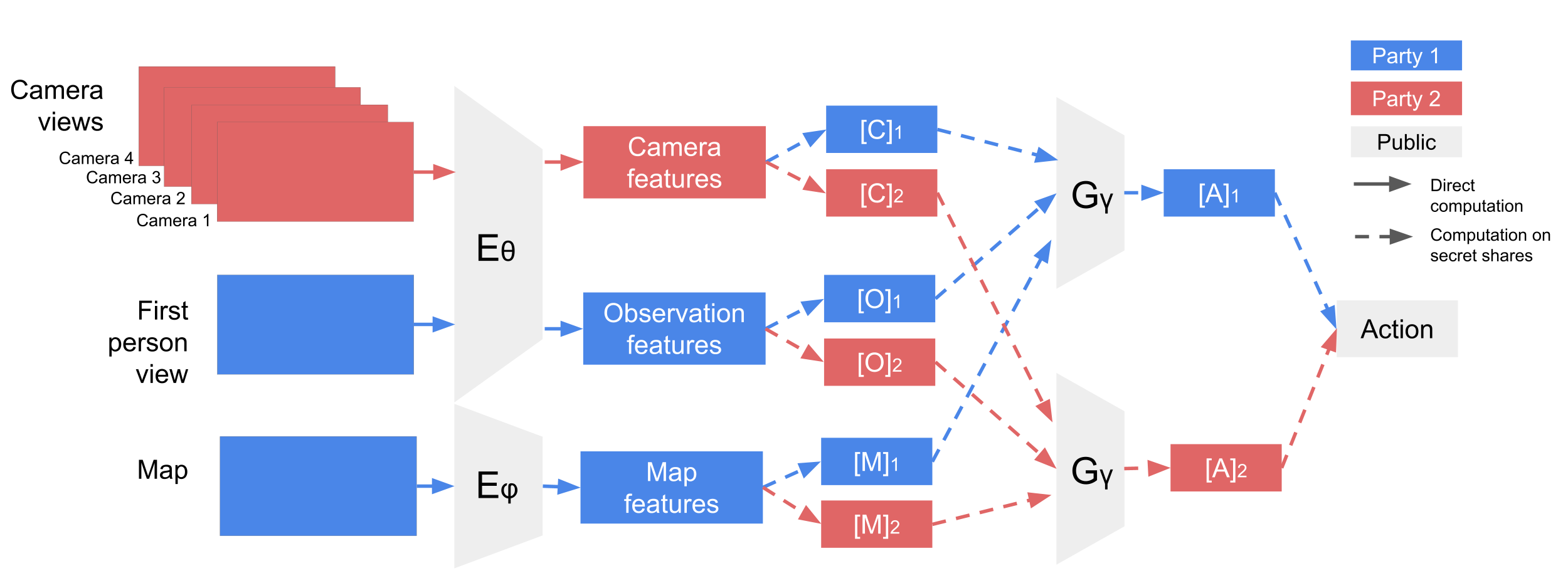

We introduce a framework for navigating through cluttered environments by connecting multiple cameras while preserving privacy. Occlusions and obstacles in large environments are often challenging for navigation agents because the environment is not fully observable from a single camera view. Given multiple camera views of an environment, our approach learns to produce a multiview scene representation that can only be used for navigation, provably preventing any party from inferring anything beyond the output of the task. On a new navigation dataset, Obstacle World, experiments show that private multiparty representations allow navigation through complex scenes and around obstacles while jointly preserving privacy. Our approach scales to an arbitrary number of camera viewpoints. We believe that developing privacy-preserving visual representations is increasingly important for many applications, such as navigation.

Multi-party Computation (MPC)

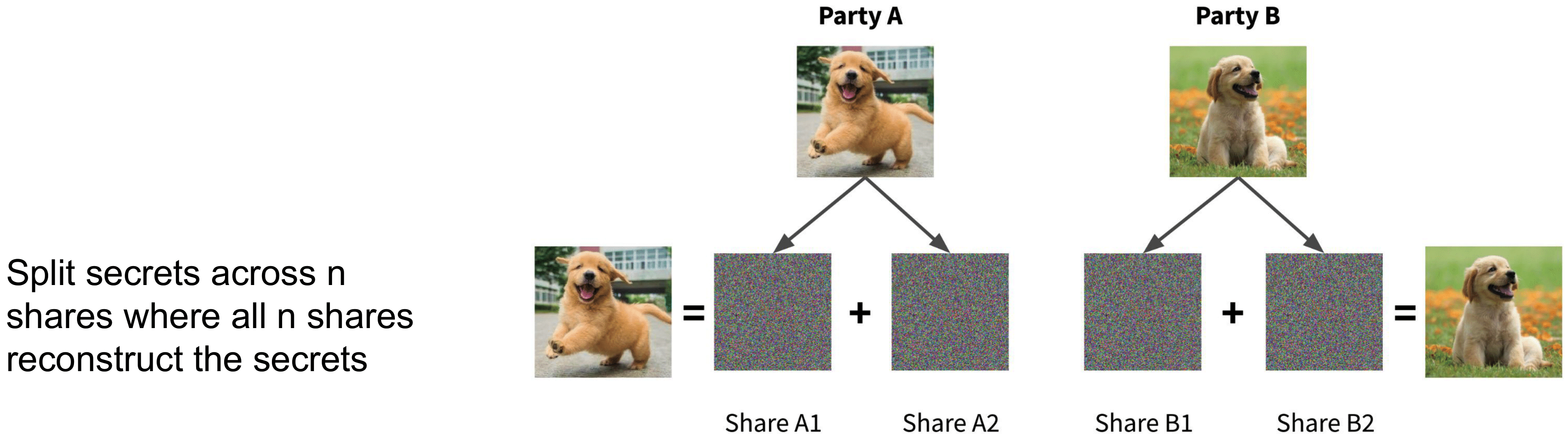

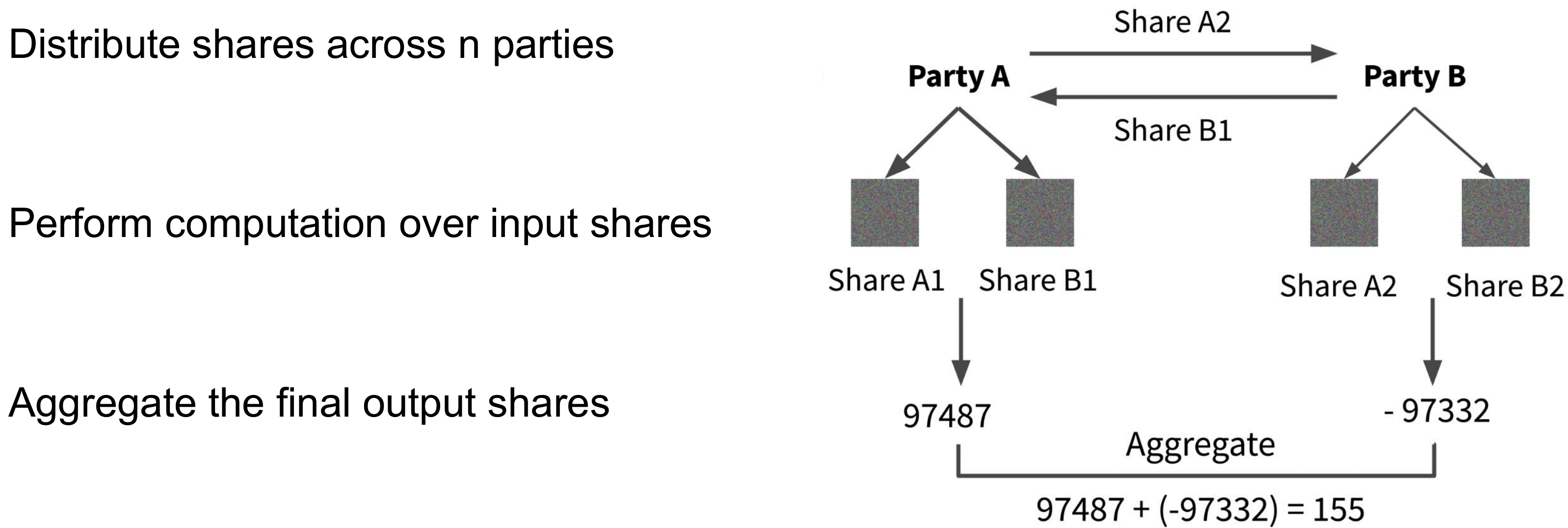

Secure multi-party computation (MPC) is a family of cryptographic protocols that lets several parties jointly compute a function over their private inputs while revealing nothing other than the final output. In particular, we use Shamir's secret sharing protocol.
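
To make the sharing step concrete, here is a minimal Python sketch of Shamir's (t, n) threshold scheme over a prime field. The modulus `PRIME = 2**61 - 1` and the helper names `share` and `reconstruct` are illustrative assumptions, not the authors' implementation. A secret is hidden as the constant term of a random polynomial of degree t-1; each party holds one point on that polynomial, any t points recover the secret by Lagrange interpolation, and fewer than t points reveal nothing about it. Because the scheme is linear, parties can add their shares locally to obtain shares of a sum.

```python
import random

# Illustrative field modulus (a Mersenne prime); not taken from the paper.
PRIME = 2**61 - 1


def share(secret: int, n: int, t: int, rng: random.Random) -> list[tuple[int, int]]:
    """Split `secret` into n shares; any t reconstruct it, fewer reveal nothing."""
    if not 0 < t <= n:
        raise ValueError("need 0 < t <= n")
    # Random polynomial f of degree t-1 with f(0) = secret.
    coeffs = [secret % PRIME] + [rng.randrange(PRIME) for _ in range(t - 1)]

    def f(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):  # Horner's rule, highest degree first
            acc = (acc * x + c) % PRIME
        return acc

    # Party i receives the point (i, f(i)) for i = 1..n.
    return [(i, f(i)) for i in range(1, n + 1)]


def reconstruct(shares: list[tuple[int, int]]) -> int:
    """Recover f(0) by Lagrange interpolation over the prime field."""
    secret = 0
    for j, (xj, yj) in enumerate(shares):
        num, den = 1, 1
        for m, (xm, _) in enumerate(shares):
            if m != j:
                num = num * (-xm) % PRIME
                den = den * (xj - xm) % PRIME
        secret = (secret + yj * num * pow(den, -1, PRIME)) % PRIME
    return secret


rng = random.Random(0)
a = share(42, n=3, t=2, rng=rng)
b = share(100, n=3, t=2, rng=rng)
# Each party adds its two shares locally; no communication is needed.
c = [(x, (ya + yb) % PRIME) for (x, ya), (_, yb) in zip(a, b)]
print(reconstruct(a[:2]))  # 42
print(reconstruct(c[1:]))  # 142
```

Only additions and multiplications by public constants are local in this way; multiplying two shared values requires an interactive protocol, which this sketch does not cover.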

Method

We present a model that uses additional private information to achieve better navigation path planning while preserving privacy. In self-driving navigation and robotic path planning in particular, secure multi-party computation and secret sharing protocols can be used to conceal the contents of each camera view so that the joint representation can only be used to predict navigation steps. By the security definition of MPC, no party can infer any information beyond what can reasonably be inferred from the final output sequence of predicted actions.
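
The sketch below illustrates, under simplifying assumptions, how secret-shared camera content can be consumed by a model without any computing party seeing it. Each camera secret-shares a feature vector (assumed to be already quantized to integers) among `n_parties` parties using Shamir's scheme, the parties apply a public linear layer to their shares locally, and only the resulting action logits are reconstructed. The function names, the single linear layer, and the integer encoding are hypothetical choices for illustration, not the paper's architecture; a full network would also need interactive MPC protocols for multiplications between shared values and for non-linearities.

```python
import random

# Illustrative field modulus (a Mersenne prime); any sufficiently large prime works.
PRIME = 2**61 - 1


def encode(v: int) -> int:
    """Map a signed integer into the field Z_PRIME."""
    return v % PRIME


def decode(v: int) -> int:
    """Read field elements above PRIME // 2 back as negative integers."""
    return v - PRIME if v > PRIME // 2 else v


def shamir_share(secret: int, n: int, t: int, rng: random.Random) -> list[int]:
    """Return f(1), ..., f(n) for a random degree-(t-1) polynomial with f(0) = secret."""
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(t - 1)]
    return [sum(c * pow(x, k, PRIME) for k, c in enumerate(coeffs)) % PRIME
            for x in range(1, n + 1)]


def shamir_open(ys: list[int], xs: list[int]) -> int:
    """Lagrange-interpolate the points (xs[j], ys[j]) at x = 0."""
    out = 0
    for xj, yj in zip(xs, ys):
        num = den = 1
        for xm in xs:
            if xm != xj:
                num = num * -xm % PRIME
                den = den * (xj - xm) % PRIME
        out = (out + yj * num * pow(den, -1, PRIME)) % PRIME
    return out


def private_logits(features, weights, n_parties=3, t=2, seed=0):
    """Compute sum_k weights[k] @ features[k] while opening only the result.

    features: one integer feature vector per camera (private to that camera).
    weights:  one public (n_actions x dim) integer matrix per camera.
    """
    rng = random.Random(seed)
    n_actions = len(weights[0])
    # shares[p][k][d] is party p's share of feature d from camera k.
    shares = [[[0] * len(x) for x in features] for _ in range(n_parties)]
    for k, x in enumerate(features):
        for d, value in enumerate(x):
            for p, s in enumerate(shamir_share(encode(value), n_parties, t, rng)):
                shares[p][k][d] = s
    # Each party applies the public linear layer to its own shares; no
    # communication is needed because the operation is linear.
    local = [
        [sum(weights[k][a][d] * sh[k][d]
             for k in range(len(features))
             for d in range(len(features[k]))) % PRIME
         for a in range(n_actions)]
        for sh in shares
    ]
    # The first t parties (x = 1..t) jointly open only the output logits.
    xs = list(range(1, t + 1))
    return [decode(shamir_open([local[p][a] for p in range(t)], xs))
            for a in range(n_actions)]


features = [[1, -2], [3, 4]]                      # two cameras, 2-d features
weights = [[[1, 0], [0, 1]], [[2, 1], [-1, 1]]]   # two actions
print(private_logits(features, weights))          # [11, -1]
```

For simplicity the sketch opens the logits; to reveal only the chosen action, the argmax itself would also have to be computed inside the MPC protocol.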

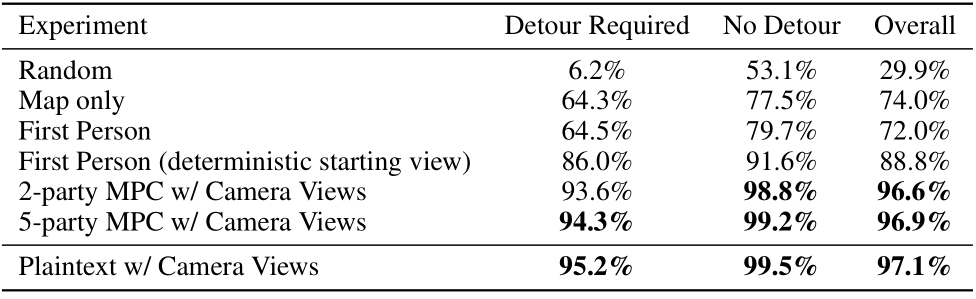

Paper

@InProceedings{lu2022visualmpc,
  title={Private Multiparty Perception for Navigation},
  author={Lu, Hui and Chiquier, Mia and Vondrick, Carl},
  booktitle={Advances in Neural Information Processing Systems 35 (NeurIPS)},
  year={2022}
}

Acknowledgements

We thank Shuran Song and Brian Smith for helpful comments. This research is based on work partially supported by the Toyota Research Institute, the NSF CAREER Award #2046910, and the NSF Engineering Research Center for Smart Streetscapes under Award #2133516. MC is supported by the Amazon CAIT PhD Fellowship.